Percent Agreement, Pearson's Correlation, and Kappa as Measures of Inter-examiner Reliability | Semantic Scholar
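
The three measures named in this title can disagree sharply. Below is a minimal Python sketch, not taken from any of the listed papers. It computes all three for two raters, using Cohen's kappa in its usual form κ = (p_o − p_e) / (1 − p_e), where p_o is observed agreement and p_e is chance agreement from the raters' marginal frequencies. The function names `percent_agreement`, `cohens_kappa`, and `pearson_r` are hypothetical helpers chosen for illustration. The toy data assume a rater B who always scores exactly one point higher than rater A.

```python
from collections import Counter
from math import sqrt


def percent_agreement(a, b):
    """Share of subjects to which both raters assign the same category."""
    if len(a) != len(b) or not a:
        raise ValueError("ratings must be non-empty and of equal length")
    return sum(x == y for x, y in zip(a, b)) / len(a)


def cohens_kappa(a, b):
    """Cohen's kappa for two raters: (p_o - p_e) / (1 - p_e)."""
    n = len(a)
    p_o = percent_agreement(a, b)  # observed agreement
    count_a, count_b = Counter(a), Counter(b)
    # Chance agreement from each rater's marginal category frequencies;
    # Counter returns 0 for categories rater B never used.
    p_e = sum(count_a[c] * count_b[c] for c in count_a) / (n * n)
    if p_e == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (p_o - p_e) / (1 - p_e)


def pearson_r(a, b):
    """Pearson correlation of two numeric rating lists.

    Raises ZeroDivisionError if either rater gives a constant score.
    """
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    s_ab = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    s_aa = sum((x - mean_a) ** 2 for x in a)
    s_bb = sum((y - mean_b) ** 2 for y in b)
    return s_ab / sqrt(s_aa * s_bb)


rater_a = [1, 2, 3, 4, 5]
rater_b = [2, 3, 4, 5, 6]  # rater B always scores one point higher

print(pearson_r(rater_a, rater_b))               # 1.0
print(percent_agreement(rater_a, rater_b))       # 0.0
print(round(cohens_kappa(rater_a, rater_b), 3))  # -0.19
```

Pearson's r measures only linear association, so a constant offset leaves it at 1.0 even though the raters never give the same score. Kappa goes further than raw percent agreement by discounting the agreement expected by chance.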

Revista Brasileira de Ortopedia - Evaluation of the Reliability and Reproducibility of the Roussouly Classification for Lumbar Lordosis Types

Different rates of agreement on acceptance and rejection: A statistical artifact? | Behavioral and Brain Sciences | Cambridge Core

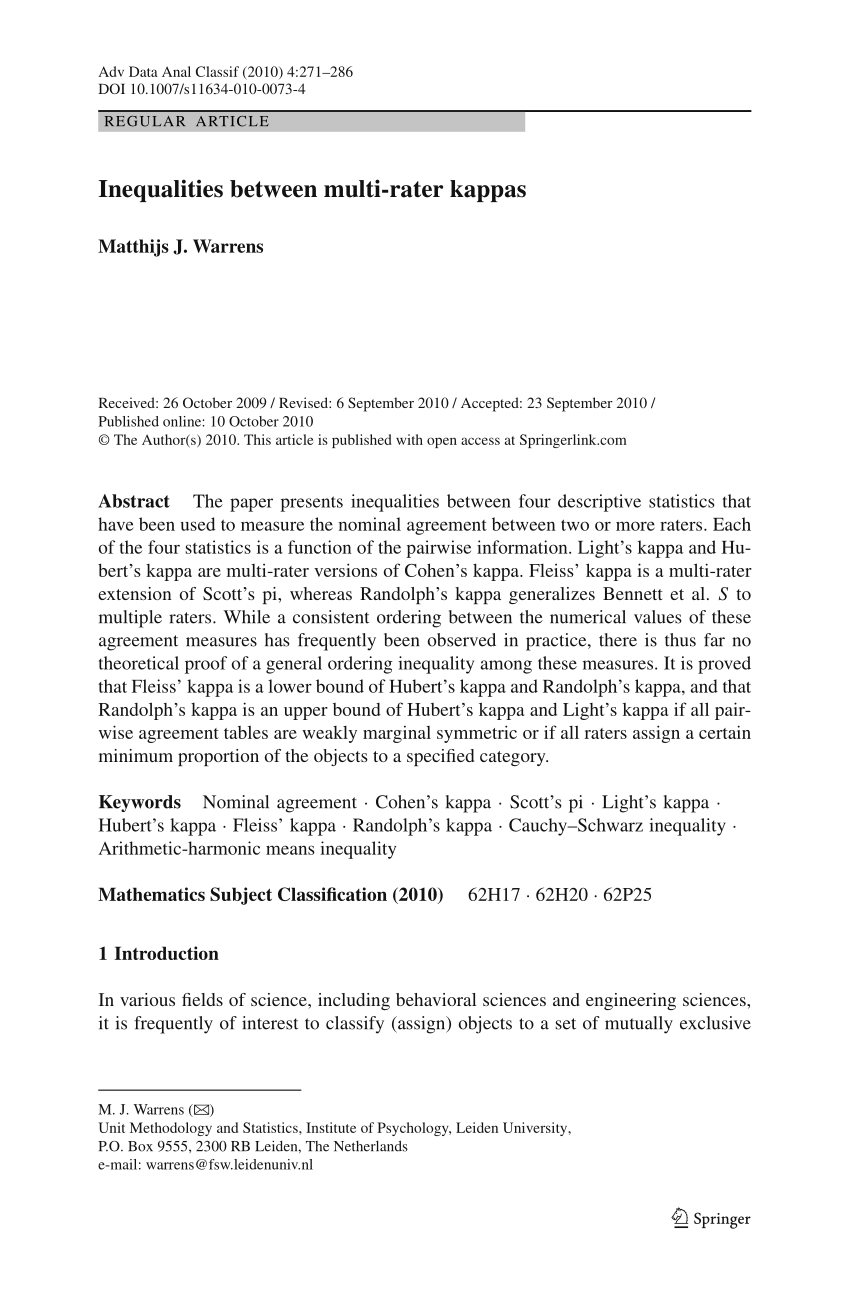

Measuring inter-rater reliability for nominal data – which coefficients and confidence intervals are appropriate? | BMC Medical Research Methodology | Full Text

![The Reliability of Dichotomous Judgments: Unequal Numbers of Judges per Subject | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/d03b63208d0cfd7f060ca7dcb872f2e2631febd2/5-Table1-1.png)

[PDF] The Reliability of Dichotomous Judgments: Unequal Numbers of Judges per Subject | Semantic Scholar

![Measurement system analysis for categorical data: Agreement and kappa type indices | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/81ea882eebdcab89e5e79a88ea5a2aee162a6630/4-Table1-1.png)

[PDF] Measurement system analysis for categorical data: Agreement and kappa type indices | Semantic Scholar

The Equivalence of Weighted Kappa and the Intraclass Correlation Coefficient as Measures of Reliability - Joseph L. Fleiss, Jacob Cohen, 1973
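
Fleiss and Cohen's result concerns weighted kappa with quadratic disagreement weights. Below is a minimal Python sketch of that statistic for two raters on an ordinal scale. It uses κ_w = 1 − Σ w_ij o_ij / Σ w_ij e_ij with w_ij = (i − j)² / (k − 1)², where o_ij are observed cell proportions and e_ij are the products of the row and column totals. `quadratic_weighted_kappa` is a hypothetical helper. The sketch assumes the caller supplies the full ordered list of categories, and it does not compute the intraclass correlation itself.

```python
def quadratic_weighted_kappa(a, b, categories):
    """Weighted kappa for two raters with quadratic disagreement weights.

    `categories` must list every possible rating in order, lowest first;
    every rating in `a` and `b` must appear in it.
    """
    if len(a) != len(b) or not a:
        raise ValueError("ratings must be non-empty and of equal length")
    k = len(categories)
    if k < 2:
        raise ValueError("need at least two categories")
    index = {c: i for i, c in enumerate(categories)}
    n = len(a)

    # Observed k x k table of joint proportions (rater A rows, rater B columns).
    observed = [[0.0] * k for _ in range(k)]
    for x, y in zip(a, b):
        observed[index[x]][index[y]] += 1 / n

    # Marginal proportions for each rater.
    row = [sum(observed[i]) for i in range(k)]
    col = [sum(observed[i][j] for i in range(k)) for j in range(k)]

    num = den = 0.0
    for i in range(k):
        for j in range(k):
            w = (i - j) ** 2 / (k - 1) ** 2  # 0 on the diagonal, 1 at the extremes
            num += w * observed[i][j]
            den += w * row[i] * col[j]  # expected proportion under independence
    if den == 0:
        raise ValueError("weighted kappa is undefined for this table")
    return 1 - num / den


scale = [1, 2, 3, 4, 5, 6]
print(quadratic_weighted_kappa([1, 2, 3], [1, 2, 3], scale))  # 1.0
print(round(quadratic_weighted_kappa([1, 2, 3], [3, 2, 1], [1, 2, 3]), 3))  # -1.0
print(round(quadratic_weighted_kappa([1, 2, 3, 4, 5], [2, 3, 4, 5, 6], scale), 3))  # 0.8
```

Quadratic weights give partial credit for near misses. A rater who always scores one point higher still gets a fairly high weighted kappa (0.8 in the last line), while a complete reversal of the scale is penalized most heavily.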

Using appropriate Kappa statistic in evaluating inter-rater reliability. Short communication on “Groundwater vulnerability and contamination risk mapping of semi-arid Totko river basin, India using GIS-based DRASTIC model and AHP techniques ...

![A scattered CAT: A critical evaluation of the consensual assessment technique for creativity research. | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/0f2ab3e77a314c0b963868858ffd4646295d63b0/18-Figure1-1.png)