![A Study on Convolution using Half-Precision Floating-Point Numbers on GPU for Radio Astronomy Deconvolution | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/7ff8896d21d574e499be4cc527fd44d9888b2d42/1-Figure1-1.png)

[PDF] A Study on Convolution using Half-Precision Floating-Point Numbers on GPU for Radio Astronomy Deconvolution | Semantic Scholar

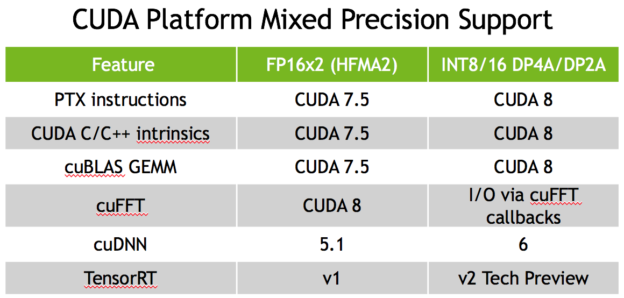

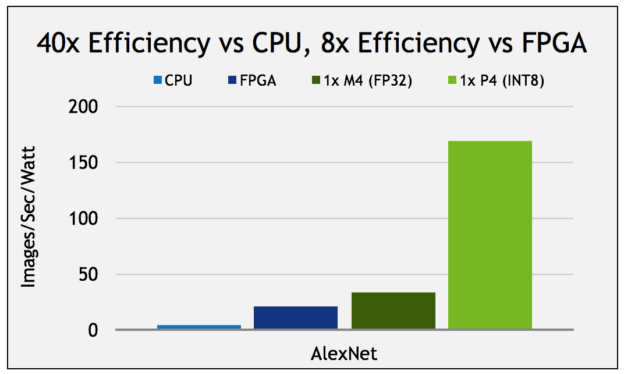

Revisiting Volta: How to Accelerate Deep Learning - The NVIDIA Titan V Deep Learning Deep Dive: It's All About The Tensor Cores

![A Study on Convolution Operator Using Half Precision Floating Point Numbers on GPU for Radioastronomy Deconvolution | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/f93daead83667c2173c0814f6479c2b9aa686770/4-Figure4-1.png)

[PDF] A Study on Convolution Operator Using Half Precision Floating Point Numbers on GPU for Radioastronomy Deconvolution | Semantic Scholar

Nvidia Unveils Pascal Tesla P100 With Over 20 TFLOPS Of FP16 Performance - Powered By GP100 GPU With 15 Billion Transistors & 16GB Of HBM2

![A Study on Convolution Operator Using Half Precision Floating Point Numbers on GPU for Radioastronomy Deconvolution | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/f93daead83667c2173c0814f6479c2b9aa686770/4-Figure2-1.png)

[PDF] A Study on Convolution Operator Using Half Precision Floating Point Numbers on GPU for Radioastronomy Deconvolution | Semantic Scholar

NVIDIA Pascal GP100 GPU Expected To Feature 12 TFLOPs of Single Precision Compute, 4 TFLOPs of Double Precision Compute Performance

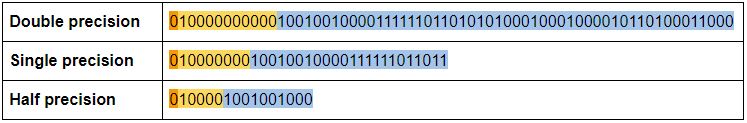

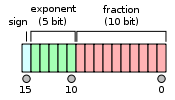

Benchmarking floating-point precision in mobile GPUs - Graphics, Gaming, and VR blog - Arm Community

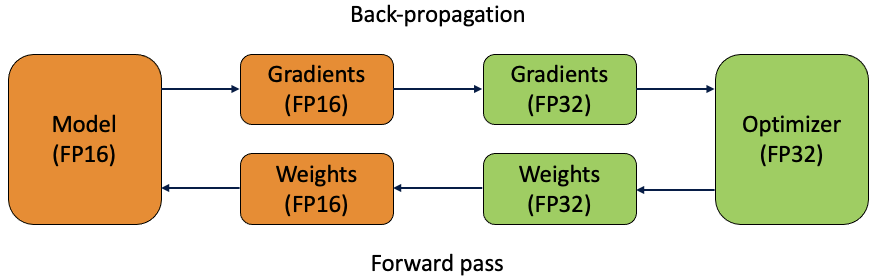

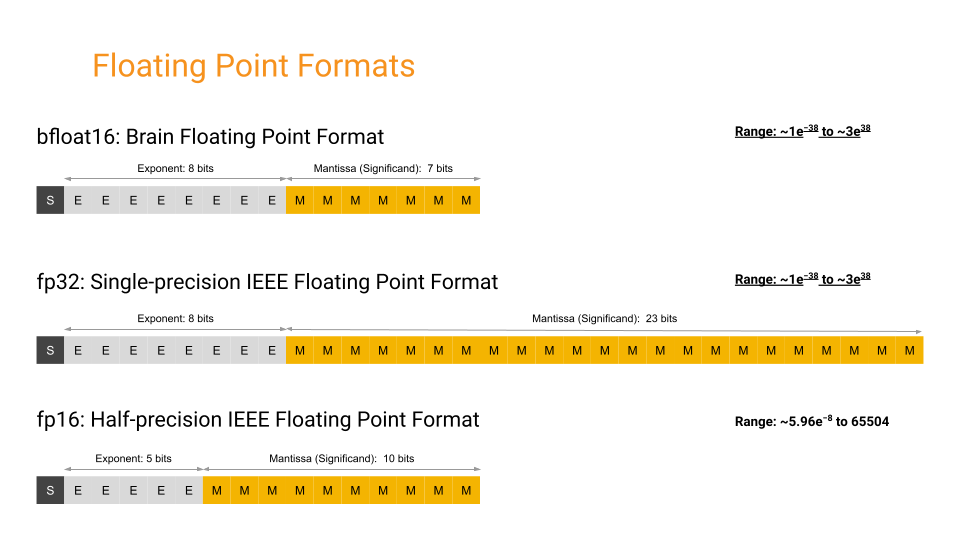

Introducing Faster Training with Lightning and Brain Float16 | by PyTorch Lightning team | PyTorch Lightning Developer Blog