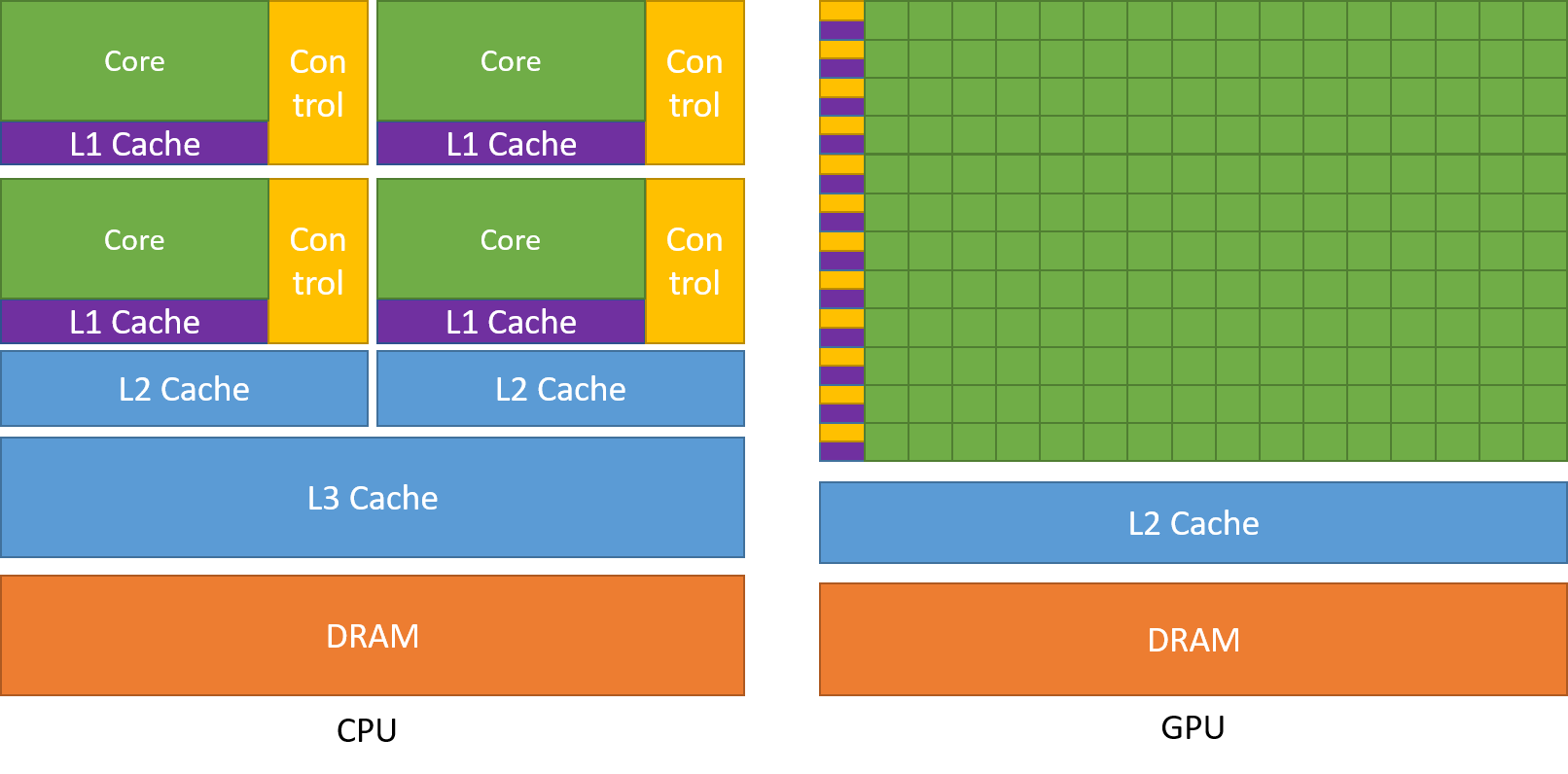

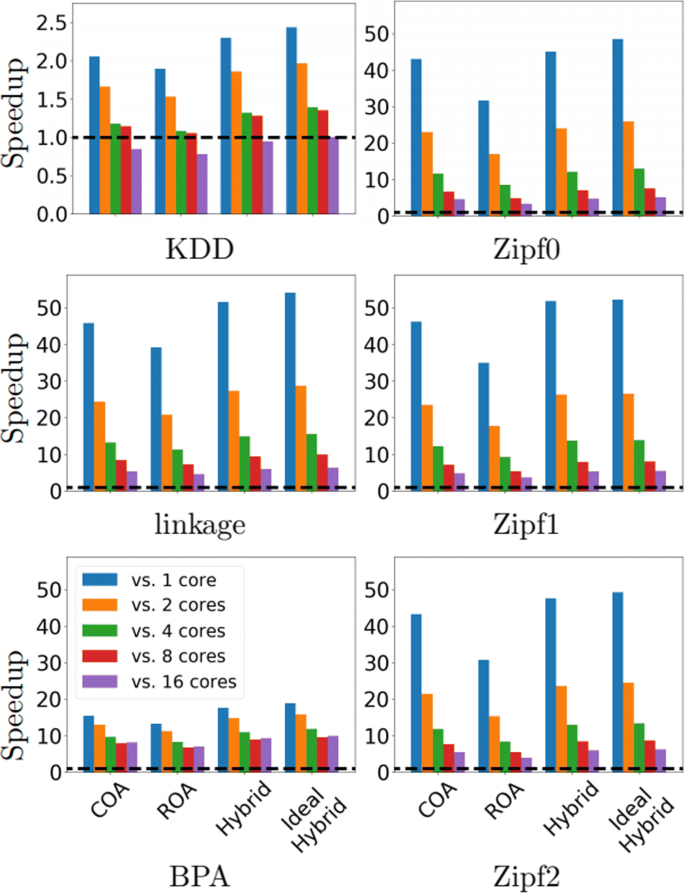

Parallel acceleration of CPU and GPU range queries over large data sets | Journal of Cloud Computing | Full Text

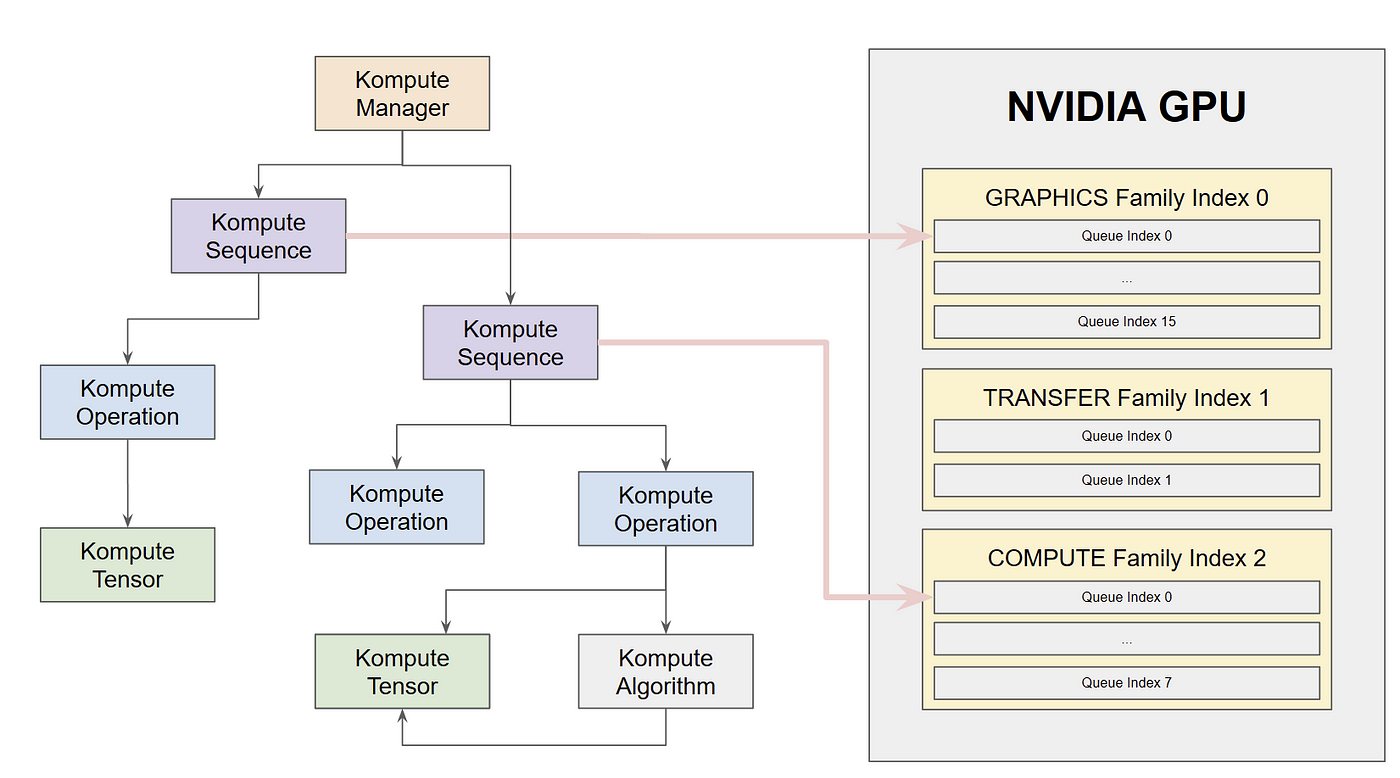

Parallelizing GPU-intensive Workloads via Multi-Queue Operations using Kompute & Vulkan | by Alejandro Saucedo | Towards Data Science

8 GPU Liquid-Cooled GPU Server | 10 GPU Liquid-Cooled NVIDIA A6000, A100, Quadro RTX Server for Deep Learning, GPU rendering and parallel GPU processing.

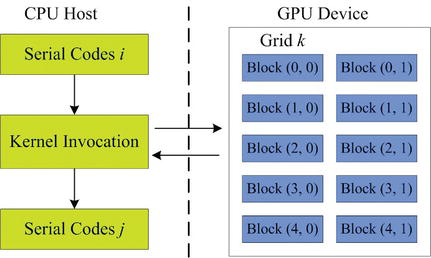

Typical CUDA program flow. 1. Copy data to GPU memory; 2. CPU instructs... | Download Scientific Diagram

![CPU + GPU in parallel loops? | [H]ard|Forum](https://cdn.hardforum.com/data/attachment-files/2017/09/89203_FB_IMG_1504797990455.jpg)